The America’s Cup has always been at the forefront of technology, not only in the design of the boats but also in the technology of how the event is run and the races are shared with the viewer.

Back in the 1980s when the boats were in displacement mode, Computational Fluid Dynamics was beginning to be used to find the fastest hull and sail shapes. At about that same time, we were introduced to graphical representations of the boats and the racecourse in the event broadcast.

In AC34 we saw a big jump in the technology of the boats when they started foiling. But we also saw a big jump in race management with robotic marks whose positions were autonomously controlled by a race management program, and electronic umpiring where boat positions were reviewed using highly accurate positional data to make better real-time calls. This was the first time that any sport had been officiated using electronic positional data.

At AC34, we were also introduced to a massive step up in broadcast technology with “Liveline”, in which augmented reality (AR) graphics such as gridlines were superimposed over the live video feed. The Liveline team received an Emmy award for this technology, which was the first to superimpose AR graphics over images from cameras that were not in fixed positions, as they were mounted on helicopters.

Now, for America’s Cup 37, Capgemini and America’s Cup Media have revealed WindSight IQ™, the product innovation they are bringing to the broadcast of the Louis Vuitton 37th America’s Cup (AC37) as part of their global partnership.

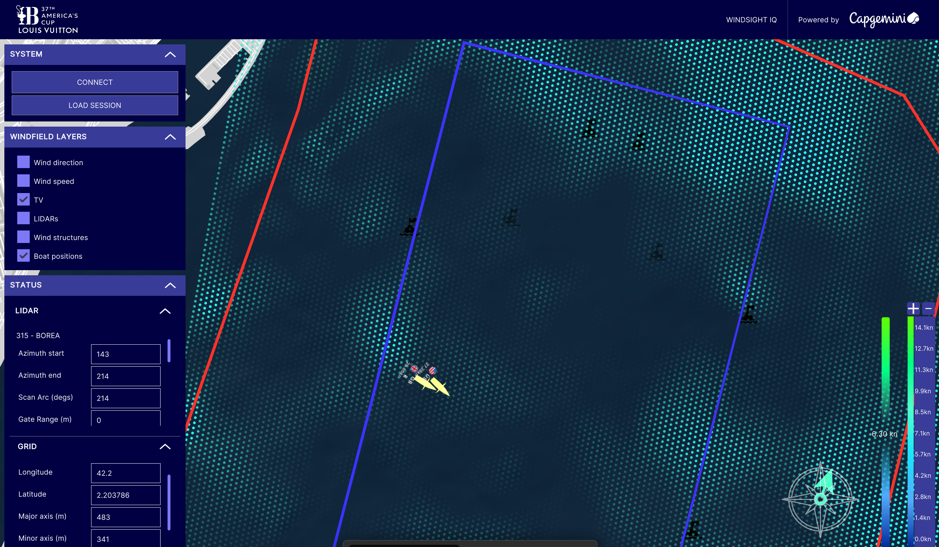

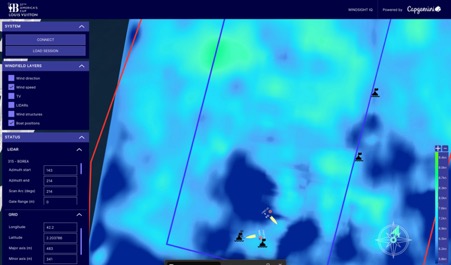

WindSight IQ enables the broadcast team to show viewers a graphical overlay of the wind superimposed over real-time video images of the racecourse.

Furthermore, WindSight IQ enables a yacht simulator to take the wind data and calculate the optimal routes around the course, enabling commentators and viewers to predict what teams on the water should do or analyze how teams could have optimized their path.

The Development of WindSight IQ

The wind is one of the key elements that can determine the results of an America’s Cup race. With boats reaching speeds up to four times the speed of the wind, small differences in wind speed can make big differences in boat speed. Because of the technical difficulty, no one had previously been able to demonstrate real-time visualization of the wind.

AC Media was looking for a willing partner to take on this technical challenge and found one in Capgemini, and the partnership was born. Capgemini has a strong track record of using its sponsorships to add value to the fan experience and to sporting performance, as at the 2023 Ryder Cup, where it provided Outcome IQ.

The core challenge of this innovation lies in combining engineering and data visualisation: accurately capturing the intricate patterns of the wind and portraying them in a way that fans and commentators can easily see and understand. Because the wind is a key factor in winning or losing, the patterns that WindSight IQ reveals let the broadcast team show the wind on the racecourse, so that commentators and viewers can better understand the gains and losses of the boats at any given time.

Bringing WindSight IQ to the America’s Cup

Technology is changing the way that we consume sport, and viewing habits are changing with it. A recent study by the Capgemini Research Institute (A Whole New Ball Game – Why Sports Tech is a Game Changer, Capgemini Research Institute, June 2023) found that 84 percent of fans say emerging technologies have enhanced their overall viewing experience. With 77 percent of Gen Z and 75 percent of Millennials preferring to watch sport outside of live venues, the viewing experience on screen is more important than ever.

WindSight IQ gives commentators and viewers more data and insight into the wind on the racecourse than is available to the competing teams on the water. Being able to see the unseen wind and compare teams’ actual performances and tactical decisions to the optimum routes will mean audiences can follow and engage in the racing on a whole new level.

The Magic Behind the Technology

Through technological innovation, combining laser sensor technology with advanced engineering and new visualization techniques, WindSight IQ brings together the digital and physical worlds.

Combining their expertise in technology, engineering, data and design, Capgemini and America’s Cup Media use Doppler LiDAR devices to collect the raw wind data. Sensor fusion and scientific computation then convert the data to real-time graphics to reveal the wind in augmented reality (AR) and virtual reality (VR) images.

The wind field data is also fed to a yacht simulator program to create a live “ghost boat” simulation that can also be projected onto the racecourse in another AR and VR graphics layer. Using the WindSight IQ data, the ghost boat simulator can show the optimum path crews could take given the measured variations in wind speed and direction.

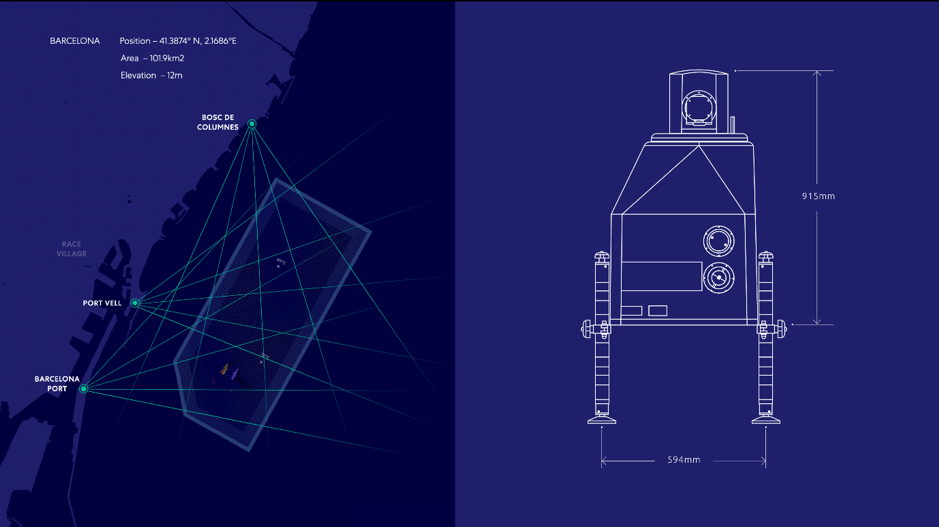

To accurately collect raw wind data, three Halo Photonics LiDAR units have been precisely positioned along the Barcelona waterfront to scan the entire race area. Each LiDAR has an average scanning range of 6.5km, up to a maximum of 12km, and measures wind speed every 1.5 meters in the scanned area. The units can detect wind from 0 to 73 knots with an accuracy of +/-0.2 knots.

WindSight IQ can supplement the LiDAR data with wind data taken directly from the wind sensors on the racing yachts and buoys. All these data streams are fused using innovative algorithms to create a wind field over the entire racecourse. The wind field output is refreshed every second based on the sensor measurements and predictive wind models.
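The article does not describe the fusion algorithms themselves, so the following is only a minimal sketch of one generic way to blend scattered point measurements into an estimate at a single grid cell: inverse-distance weighting, applied to east/north wind components so that directions either side of north average correctly. The function names, the sample layout and the weighting power are assumptions for illustration and are not part of WindSight IQ.

```python
import math


def to_uv(speed_kn, dir_deg):
    """Speed and direction the wind blows FROM -> (east, north) components."""
    rad = math.radians(dir_deg)
    return -speed_kn * math.sin(rad), -speed_kn * math.cos(rad)


def from_uv(u, v):
    """(east, north) components -> (speed, direction the wind blows FROM)."""
    speed = math.hypot(u, v)
    dir_deg = math.degrees(math.atan2(-u, -v)) % 360.0
    return speed, dir_deg


def idw_wind(x, y, samples, power=2.0):
    """Estimate the wind at (x, y) in metres.

    samples: list of (sx, sy, speed_kn, dir_deg) point measurements.
    ASSUMPTION: plain inverse-distance weighting stands in for the
    unspecified fusion and predictive models mentioned in the article.
    """
    if not samples:
        raise ValueError("at least one sample is required")
    w_sum = u_sum = v_sum = 0.0
    for sx, sy, speed_kn, dir_deg in samples:
        u, v = to_uv(speed_kn, dir_deg)
        dist = math.hypot(x - sx, y - sy)
        if dist < 1e-9:
            # Query point coincides with a sensor: use its reading directly.
            return from_uv(u, v)
        w = 1.0 / dist ** power
        w_sum += w
        u_sum += w * u
        v_sum += w * v
    return from_uv(u_sum / w_sum, v_sum / w_sum)


# Two hypothetical sensors 1 km apart, reporting 10 kn from 350 and 10 degrees.
readings = [(0.0, 0.0, 10.0, 350.0), (1000.0, 0.0, 10.0, 10.0)]
speed, direction = idw_wind(500.0, 0.0, readings)
print(f"{speed:.2f} kn from {direction:.1f} deg")
```

Averaging components rather than raw angles matters here: a naive mean of 350 and 10 degrees would give 180, the opposite of the true wind direction. Repeating the estimate for every cell of a grid would produce a simple wind field.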

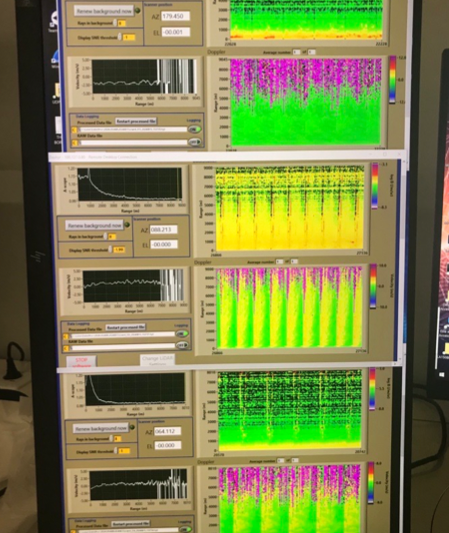

Doing this is easier said than done. It requires vast computing power to interpret the signals and convert them into a 30km² wind map at 10m resolution, which is then converted into graphics that can be overlaid onto the broadcast stream. Hardware limitations mean that balancing range, refresh rate and resolution is part of the operator’s job, and it must be done in real time. The calculations run on a bank of computers in the AC Media production room, which outputs the wind field and then the 3D graphics that are overlaid onto the video feed of the racecourse that the viewer sees.
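To give a feel for the scale involved, the figures quoted in the text can be turned into rough counts. This is back-of-the-envelope arithmetic only. The two values stored per cell, and the reading of "every 1.5 meters" as the spacing of measurements along a single LiDAR beam, are assumptions rather than published specifications.

```python
# Figures quoted in the article.
AREA_KM2 = 30            # size of the wind map
CELL_M = 10              # grid resolution
REFRESH_HZ = 1           # wind field refreshed every second
AVG_RANGE_M = 6_500      # average LiDAR scanning range
MAX_RANGE_M = 12_000     # maximum LiDAR scanning range
SPACING_M = 1.5          # distance between wind speed measurements

# Number of 10 m x 10 m cells needed to cover 30 square kilometres.
cells = (AREA_KM2 * 1_000_000) // (CELL_M * CELL_M)

# ASSUMPTION: each cell carries two values (wind speed and direction).
values_per_second = cells * 2 * REFRESH_HZ

# ASSUMPTION: one measurement every 1.5 m along a single beam.
points_avg = int(AVG_RANGE_M / SPACING_M)
points_max = int(MAX_RANGE_M / SPACING_M)

print(cells, values_per_second, points_avg, points_max)
```

Under these assumptions the map has 300,000 cells and 600,000 values to refresh each second, and a single beam yields roughly 4,300 measurements at average range and 8,000 at maximum range.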

Application of the Ghost Boat

A core part of the collaboration with America’s Cup Media was enabling a new broadcast feature known as the “Ghost Boat.” This combines existing AC75 simulator performance algorithms with the wind data from WindSight IQ and the performance polars from the race yachts to determine the optimal path to get to the next mark. This path is then displayed on the screen. Additionally, after the race, the path of each boat can be compared to the most efficient route of the Ghost Boat to see how far off they are from optimal.
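The simulator and the AC75 polars are not public, so the sketch below only illustrates the basic idea of reading a performance polar: for a given wind speed, pick the sailing angle that maximizes velocity made good (VMG) towards an upwind mark. The polar table is invented for illustration, as are the function names, and a real routing calculation would also account for maneuvers, course boundaries and wind that varies along the path.

```python
import math

# HYPOTHETICAL polar: true wind angle (degrees) -> boat speed expressed as a
# multiple of true wind speed. These numbers are made up, not AC75 data.
POLAR = {40: 2.0, 45: 2.4, 50: 2.7, 55: 2.9, 60: 3.0, 70: 3.2}


def upwind_vmg(tws_kn, twa_deg, polar=POLAR):
    """Speed made good directly upwind when sailing at twa_deg to the wind."""
    boat_speed = tws_kn * polar[twa_deg]
    return boat_speed * math.cos(math.radians(twa_deg))


def best_upwind_angle(tws_kn, polar=POLAR):
    """Return (angle, vmg) for the tabulated angle with the highest VMG."""
    best = max(polar, key=lambda twa: upwind_vmg(tws_kn, twa, polar))
    return best, upwind_vmg(tws_kn, best, polar)


def minutes_to_climb(distance_nm, tws_kn, polar=POLAR):
    """Minutes needed to gain distance_nm straight upwind at the best VMG."""
    _, vmg = best_upwind_angle(tws_kn, polar)
    return distance_nm / vmg * 60.0


# Compare one nautical mile of upwind progress in 10 kn versus 12 kn of wind.
for tws in (10.0, 12.0):
    angle, vmg = best_upwind_angle(tws)
    print(f"{tws:.0f} kn: sail at {angle} deg, VMG {vmg:.1f} kn, "
          f"{minutes_to_climb(1.0, tws):.2f} min per mile upwind")
```

With this invented table the fastest progress upwind comes from sailing at 50 degrees to the wind rather than at the angle with the highest boat speed, and the side of the course with more wind pays off directly in time saved. Comparing such an ideal against what each crew actually sailed is the kind of analysis the Ghost Boat makes visible.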

WindSight IQ is not visible to the teams competing on the water. The teams are not given access to the real-time data, mainly because it would so dramatically change the way the race is sailed. Currently, assessing the strength and direction of the wind, and therefore choosing the path to the next mark, comes down to the skill of the sailors.

Having access to the WindSight IQ data would remove this difference in skill and detract from the core essence of the competition. Of course, the team members not on the race yachts will have the same access to the feed as the viewers, but RRSAC 41 prevents this, or any other, information from being transferred to the race yachts while racing.

WindSight IQ Now and into the Future

WindSight IQ’s current capabilities are being shown extensively in the AC37 broadcasts, calculating and displaying the varying wind strength and direction across the racecourse. Commentators are enthusiastically incorporating the wind field into their narrative, explaining why the boats are fighting for one area of the course or how one boat was able to pass another.

There have been multiple suggestions on how the technology could be further used to explain what is going on. The most common is to display the turbulent air coming off the sails of the yachts. Currently, the spatial resolution and the LiDAR sampling and scanning rates cannot support such graphics, but with the addition of more LiDARs or improved hardware this will be possible in the future.

Once again WindSight IQ is an example of how the America’s Cup and Capgemini are leading the charge in sports broadcast graphics. It is easy to imagine how the technology could be used in other sports. The data captured by WindSight IQ paves the way for AI-enabled weather forecasting for localized areas, which could be applied to virtually any sport where the wind is a factor.

For example, in golf, if the broadcast team could display what the wind is doing on a given hole, it could enable the commentators to explain unexpected changes in the path of any given shot. Or perhaps for the NFL, the broadcast could show the wind swirl inside the stadium to help explain the behaviour of a ball as it travels through the air.

There are also some promising applications in industry where this technology could be used. In the emerging field of urban air vehicles and air taxis, there is a need for precision landing in potentially complex wind environments, where conventional means of detecting dangerous wind effects such as wind shear are not sufficient. This technology could also let airport traffic controllers directly see the wake turbulence on the runway, potentially allowing planes to take off or land sooner than current separation rules would allow.

There are obviously other applications in the wind energy industry, enabling better placement and safer construction of wind turbines. There are also less obvious use cases where understanding the localized wind field is vital – helping to fight wildfires for example, or monitoring pollution or other harmful emissions.

Mike Martin, a Rolex Yachtsman of the Year and world champion sailor, is the Director, Head of Automation & Robotics, Synapse Product Development, and Keith Williams is Capgemini’s Chief Engineer for WindSight IQ.